Abstract

Virtual production has grown rapidly in popularity in the film industry in recent years. The demand for faster and cheaper productions, continuous innovation in hardware and real-time rendering, the widespread adoption of virtual and augmented reality and, most recently, the need for remote collaboration are all driving its consolidation in the film production lifecycle. Although the benefits of a virtual production approach reach many departments, the compositing and visual effects department is one of the most affected.

This paper traces the evolution of compositing technologies and explains why virtual production is a natural fit for them. We also examine how today’s compositing workflows are being influenced by virtual production and discuss the future prospects of compositing techniques.

Keywords: Virtual Production, Chroma Key, Visual Effects, Cinematography, Real-time Render

Introduction

In 1898, the pioneering British filmmaker George Albert Smith discovered a way of introducing elements into film clips through double exposure, according to a historical review of the filmmaker’s works (Leeder 2017, 67-95). From this milestone onward, the film industry witnessed a gradual development of visual effects techniques, starting with double-exposure mattes, then black mattes and travelling mattes. Some of these relied on optical printers or ultraviolet light, others on sodium vapour lighting, and others on perspective illusions to produce similar results. In the 1960s, P. Vlahos created and patented a process called the colour difference travelling matte (Vlahos 1964), which resembles the technology still used today. The following decades were marked by the growth of computing and by the transition from purely practical background replacement and object insertion techniques to computer-aided procedures based on what is called chroma keying, or the green screen effect.

The film industry has taken great advantage of chroma keying, and green screens are now ubiquitous, from large blockbusters to the lowest-budget home-made videos. However, in recent years, new technologies have raised the question of whether green screens will eventually become a thing of the past. A new background replacement technique has been named virtual production, although the term can also refer to other aspects of film production. It essentially consists of using a suite of software tools to combine live-action footage and computer graphics in real time, generally on large stages whose LED walls create a sophisticated virtual filmmaking environment. A closer look at what virtual production is and how it is being used, however, shows that this innovation is not a replacement for chroma keying. It is rather a logical step in the constant evolution of the film industry, with broader impacts on the entire production workflow.

The current work aims to provide a perspective on the use of virtual technologies and their impact on modern cinematography. Unlike other works that maintain a global perspective, we focus on the creation of visual effects and the performance of compositing tasks using virtual production. The reader should come away understanding why the shift towards virtual environments is a natural step forward in film, how traditional compositing techniques coexist with the virtual production paradigm and what might be expected in the coming years.

State-of-the-Art

The process through which two or more images are combined to create the appearance of a single picture is called compositing. Compositing is one of the most prevalent techniques in the visual arts, especially in cinematography, as it allows the creation of imaginary worlds based on multiple live-action shots, fully computer-generated imagery or a fusion of both. Compositing can be done on-set and in-camera or during post-production, and it encompasses a wide range of advanced filmmaking techniques.
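In digital compositing, combining two images usually comes down to a per-pixel blend driven by a coverage (alpha) value. Below is a minimal sketch of that blend. It assumes straight (non-premultiplied) RGB values in [0, 1], stored as nested Python lists. The helper names `over` and `composite` are illustrative and not taken from any particular compositing package.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Blend one foreground pixel over one background pixel.

    Colours are straight (not premultiplied) RGB values in [0, 1];
    alpha is the foreground coverage: 1.0 = opaque, 0.0 = fully transparent.
    """
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


def composite(fg_img: Image, alpha_img: List[List[float]], bg_img: Image) -> Image:
    """Apply `over` to every pixel of three equally sized images (lists of rows)."""
    return [
        [over(f, a, b) for f, a, b in zip(f_row, a_row, b_row)]
        for f_row, a_row, b_row in zip(fg_img, alpha_img, bg_img)
    ]


if __name__ == "__main__":
    fg = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]  # red foreground plate, one row of two pixels
    alpha = [[1.0, 0.5]]                        # opaque pixel, then a half-covered edge pixel
    bg = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]  # blue background plate
    print(composite(fg, alpha, bg))             # [[(1.0, 0.0, 0.0), (0.5, 0.0, 0.5)]]
```

Professional tools often work with premultiplied images and large numeric arrays, but the underlying arithmetic is the same blend.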

Practical Techniques

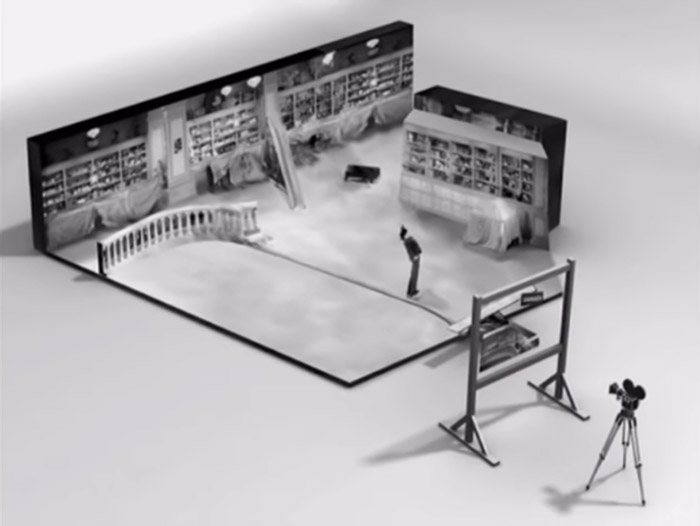

The most practical of compositing techniques is the physical composite, in which the separate parts of the image are placed together in the photographic frame and recorded in a single exposure. A classic example is the depth illusion seen in many of Charles Chaplin’s films (Modern Times 1936). Image 1 provides a graphical representation of the composite. A variation of the physical composite is background projection. In this case, the separate parts are also recorded in a single exposure, but the background is an image or video projected onto a wall. This technique is still used today, sometimes combined with virtual production for greater realism, as discussed later.

Image 1 – Physical compositing (Modern Times 1936).

A physical composite that relies on painted glass, such as the one shown above, can be considered a matte technique, since it masks different parts of the camera frame. Matte shots, however, usually refer to manipulation of the film itself, either mechanically or digitally. Originally, a matte shot was created by obscuring the background section of the film with cut-out cards. When the live-action foreground of a scene was filmed, the background section was left unexposed. Then a different, complementary cut-out was placed over the live-action section, the film was rewound, and the filmmakers shot the new background. This technique was known as the in-camera matte. Multiple developments followed for greater image stability and for preserving a backup of the original exposure (Fry et al. 1977, 22-23), but one major drawback of these early mattes was that the matte lines were stationary.

The travelling matte allowed filmmakers to overcome this limitation by making it possible to change the matte line on every frame. Through a very time-consuming, frame-by-frame manual process, the live-action portions could themselves be matted. This allowed filmmakers to move actors around the scene and integrate them completely with the background. Masking portions of the photographic frame was not reserved for background and foreground compositing alone, however. This multiple-exposure technique has been used ever since its creation for complex scenes containing several matte elements.

The masking process became more fluid with the introduction of the colour difference travelling matte (Vlahos 1964), which replaced the manual process with mask selection based on a well-defined colour difference in the captured scene. Although the original process was photochemical, this method laid the groundwork for the later integration of computer technology into the visual effects industry.
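The colour-difference idea can be illustrated digitally: a pixel’s transparency is derived from how strongly the screen colour dominates the other two channels. The sketch below assumes a green screen and RGB values in [0, 1]. It is a simplified per-pixel analogue written for illustration, not a reproduction of Vlahos’s optical process, and its `gain` parameter is a hypothetical tuning knob.

```python
def colour_difference_alpha(r: float, g: float, b: float, gain: float = 1.0) -> float:
    """Estimate foreground opacity for one pixel shot against a green screen.

    The colour difference is how far green exceeds the stronger of red and
    blue. Clean screen pixels have a large difference (alpha near 0), while
    neutral or non-green foreground pixels have none (alpha = 1).
    `gain` is a hypothetical tuning knob that scales the difference so that
    the actual screen colour reaches exactly alpha = 0.
    """
    difference = g - max(r, b)
    screen_amount = min(max(gain * difference, 0.0), 1.0)  # clamp to [0, 1]
    return 1.0 - screen_amount


if __name__ == "__main__":
    print(colour_difference_alpha(0.0, 1.0, 0.0))    # pure screen green -> 0.0
    print(colour_difference_alpha(0.8, 0.6, 0.5))    # skin-like tone -> 1.0
    print(colour_difference_alpha(0.25, 0.75, 0.25)) # partially covered edge -> 0.5
```

A blue-screen version would simply swap the roles of the blue and green channels.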

Computer-Aided Techniques

Digital matting has replaced traditional approaches for numerous reasons. When done correctly, digital matting is very precise, whereas earlier methods could drift slightly out of registration, resulting in halos and other edge artifacts in the final film. Earlier methods also required several generations of film copies, and each copy degraded the image, whereas digital images can be copied without quality loss. Moreover, blue or green screen backgrounds isolate the subjects, and digital extraction then separates them from the screen, which made masking faster and more accurate. Image 2 presents the before and after shots of a chroma key scene directed by the Wachowski sisters (The Matrix 1999).

Chroma keying is the process by which a specific colour is removed from an image or image sequence so that the removed portions can be replaced. Any solid colour can be used as a background, but there are several reasons why green and blue are used most often. Neither green nor blue is prominent in human skin tones, and people are the most commonly keyed subjects. Green, in particular, has a high luminance. Typical Bayer-pattern camera sensors also sample it at twice the density of red or blue, so it registers brightly and cleanly on digital cameras. In every case, the colour is chosen to avoid overlap between the screen and the subjects in frame.
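The basic keying step can be sketched as follows: every pixel whose colour is close enough to the key colour is swapped for the corresponding background pixel. Several simplifying assumptions apply, all chosen for illustration:

- the key colour is pure green;
- "closeness" is Euclidean distance in RGB;
- the tolerance is 0.3.

Real keyers often work in other colour spaces and produce soft mattes rather than this hard, binary decision.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def colour_distance(a: RGB, b: RGB) -> float:
    """Euclidean distance between two RGB colours (components in [0, 1])."""
    return math.dist(a, b)


def chroma_key(fg: List[List[RGB]], bg: List[List[RGB]],
               key: RGB = (0.0, 1.0, 0.0), tolerance: float = 0.3) -> List[List[RGB]]:
    """Replace every foreground pixel close to `key` with the background pixel.

    The default key colour and tolerance are illustrative assumptions. Any
    pixel within `tolerance` of the key counts as screen, so a small range
    of greens is removed rather than one exact value.
    """
    return [
        [b if colour_distance(f, key) <= tolerance else f for f, b in zip(f_row, b_row)]
        for f_row, b_row in zip(fg, bg)
    ]


if __name__ == "__main__":
    plate = [[(0.1, 0.9, 0.1), (0.8, 0.6, 0.5)]]  # screen pixel, then a skin-like pixel
    backdrop = [[(0.2, 0.2, 0.2), (0.2, 0.2, 0.2)]]
    print(chroma_key(plate, backdrop))  # [[(0.2, 0.2, 0.2), (0.8, 0.6, 0.5)]]
```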

Image 2 – Chroma-key compositing (The Matrix 1999).

Although the technique is simple in theory, achieving professional-quality extractions requires additional effort. First, shadows cast on the screen mean that the extraction must cover a range of colours rather than a single colour value (different tones of green, for example). Also, on large stages, light reflected by the screen usually casts a colour spill onto the subjects being keyed. This spill can cause information loss when the background is removed, so spill compensation techniques must be applied. Finally, in some situations the stage is populated with tracking markers or other elements of different colours, as seen in Image 2. These elements must be removed manually, sometimes frame by frame. Here we enter the realm of rotoscoping.
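Spill compensation can take many forms. One common and simple approach, used here purely as an illustration, limits the green channel of each foreground pixel to the average of its red and blue channels. The function name and the choice of the average as the limit are assumptions of this sketch.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def suppress_green_spill(pixel: RGB) -> RGB:
    """Clamp green so it never exceeds the average of red and blue.

    Green light bounced off the screen tints hair, skin and edges. Limiting
    the green channel removes that tint, while pixels that are not
    green-dominant pass through unchanged.
    """
    r, g, b = pixel
    limit = (r + b) / 2.0
    return (r, min(g, limit), b)


if __name__ == "__main__":
    print(suppress_green_spill((0.75, 0.8, 0.25)))  # spill-tinted edge -> (0.75, 0.5, 0.25)
    print(suppress_green_spill((0.8, 0.3, 0.2)))    # untouched -> (0.8, 0.3, 0.2)
```

Limiting green to `max(r, b)` instead of the average is a gentler variant, since the maximum is never smaller than the average.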

Rotoscoping is an animation technique that involves tracing over live-action footage frame by frame, producing graphic assets for both animated and live-action productions. Its use can be traced back to the beginning of the 20th century, when animator Max Fleischer used it to create more realistic animations with fluid, life-like motion (Fleischer 1915). When applied to live-action shots, rotoscoping consists of creating masks for portions of an image in order to extract elements. Its advantages include not requiring a background plane, as chroma keying does, and being applicable in virtually any scenario. However, rotoscoping is very time-consuming and requires highly skilled artists. Recent tools can partially automate the task by tracking visual elements, but manual work is still required.
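Part of the automation mentioned above consists of interpolating a mask shape between frames the artist has keyed by hand. The sketch below assumes linear interpolation between two polygonal shapes with matching vertex order. It then rasterizes the result into a binary matte using an even-odd point-in-polygon test. Production roto tools typically use splines, feathered edges and motion tracking, so all names here are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def interpolate_shape(key_a: List[Point], key_b: List[Point], t: float) -> List[Point]:
    """Linearly blend two keyframed roto shapes with matching vertex order.

    t = 0 returns key_a and t = 1 returns key_b; values in between give the
    in-between frames an artist would otherwise trace by hand.
    """
    if len(key_a) != len(key_b):
        raise ValueError("keyframes must have the same number of vertices")
    return [((1 - t) * xa + t * xb, (1 - t) * ya + t * yb)
            for (xa, ya), (xb, yb) in zip(key_a, key_b)]


def point_in_polygon(x: float, y: float, poly: List[Point]) -> bool:
    """Even-odd ray-casting test: is (x, y) inside the roto shape?"""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize_mask(poly: List[Point], width: int, height: int) -> List[List[int]]:
    """Binary matte sampled at pixel centres: 1 inside the shape, 0 outside."""
    return [[1 if point_in_polygon(col + 0.5, row + 0.5, poly) else 0
             for col in range(width)] for row in range(height)]


if __name__ == "__main__":
    frame_0 = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]   # keyed shape, frame 0
    frame_10 = [(2.0, 0.0), (4.0, 0.0), (4.0, 2.0), (2.0, 2.0)]  # keyed shape, frame 10
    frame_5 = interpolate_shape(frame_0, frame_10, 0.5)
    print(rasterize_mask(frame_5, 4, 2))  # [[0, 1, 1, 0], [0, 1, 1, 0]]
```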

Another computer-aided technique used in both animation and live-action productions is motion capture: the process of tracking the subtleties of live movement and processing this information either live or from a recording. As A. Menache puts it simply, it is the “creation of a 3D representation of a live performance” (Menache 2000). Like rotoscoping, motion capture belongs to a broader group of motion tracking techniques. It is considered a compositing technique because it integrates live performances into fully animated productions, or into live-action films through computer-generated images. This type of motion tracking has been used in cinema for over 50 years. It can be achieved with several tracking technologies, namely mechanical, optical, magnetic, acoustic and inertial trackers, although how each works is beyond the scope of this study.

When capturing the performances of live subjects such as actors, the subjects are usually fitted with small trackers that record spatial data over time. Image 3 presents a recent use case (The Hobbit 2012). Tracking can also be achieved through image processing or depth data processing, although usually with lower precision. Besides tracking the subjects in frame, it is also useful to track the camera’s movement and map it onto virtual cameras, which makes it easier to integrate computer-generated images with recorded live action.
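Mapping a tracked camera onto a virtual camera means projecting each computer-generated point with the same pose and lens as the physical camera. The sketch below makes these simplifying assumptions:

- the camera is an ideal pinhole camera;
- it only pans horizontally (yaw);
- the tracker’s output is represented by a hypothetical `TrackedCamera` record.

Real trackers report full six-degree-of-freedom poses and lens data.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TrackedCamera:
    """Per-frame pose from a hypothetical camera tracker, copied to the virtual camera."""
    position: Vec3   # camera position in world space (metres)
    yaw_deg: float   # rotation about the vertical y axis; positive turns +z towards +x
    focal_px: float  # focal length expressed in pixels
    width: int       # image width in pixels
    height: int      # image height in pixels


def project(cam: TrackedCamera, point: Vec3) -> Tuple[float, float]:
    """Project a world-space CG point into the tracked camera's image (pinhole model).

    Assumed convention: at yaw 0 the camera looks down +z, with x to the
    right and y up; image v grows downwards. Lens distortion is ignored.
    """
    # Express the point relative to the camera position.
    dx, dy, dz = (p - c for p, c in zip(point, cam.position))
    # Undo the camera's yaw by rotating the offset by -yaw about the y axis.
    a = math.radians(-cam.yaw_deg)
    x = dx * math.cos(a) + dz * math.sin(a)
    z = -dx * math.sin(a) + dz * math.cos(a)
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Perspective divide, then shift the origin to the image centre.
    u = cam.width / 2 + cam.focal_px * x / z
    v = cam.height / 2 - cam.focal_px * dy / z
    return u, v


if __name__ == "__main__":
    cam = TrackedCamera((0.0, 0.0, 0.0), 0.0, 1000.0, 1920, 1080)
    print(project(cam, (1.0, 0.5, 10.0)))  # (1060.0, 490.0)
```

The virtual camera reuses the physical camera’s pose on every frame. As a result, a CG element anchored at a fixed world position stays locked to the live-action plate as the camera moves.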

Image 3 – Motion capture (The Hobbit 2012).

Real-Time Techniques

Throughout the history of cinematography, there has been a desire to immerse the audience in the story through various audiovisual approaches. Compositing techniques provide powerful means to do so. Their development over the years has brought greater photorealism and given filmmakers more power as storytellers. By using techniques such as those presented so far and taking advantage of the ever-increasing processing capacity of computers, filmmakers have achieved unprecedented levels of visual quality and composites that cannot be distinguished from actual footage.

However, producing visual masterpieces usually involves time-consuming renders, in which the final images are produced by applying several layers of computationally intensive tasks that can take from a few hours to several days to complete. This creates many challenges for producers. When filming live-action footage, there is high uncertainty about how shots will look once integrated with other visual elements. Render times in post-production must be managed, and changes applied at this stage are very expensive. Although traditional forms of previsualization can be adopted, the production stages feel disconnected, and the picture does not come together until all shots are finalized.

This is where state-of-the-art virtual production and real-time compositing techniques excel. As N. Kadner states in ‘The Virtual Production Field Guide’ by Epic Games (Kadner 2021, 7-8), “In contrast to traditional production techniques, virtual production encourages a more iterative, nonlinear, and collaborative process. (...) With a real-time engine, high-quality imagery can be produced from the outset. (…) Assets are cross-compatible and usable from previsualization through final outputs.” By adopting a virtual approach and harnessing the real-time rendering capabilities of today’s advanced game engines, filmmakers can receive faster visual feedback. The final compositing can even be done on-set while the actors’ performances are being captured.

Hybrid virtual production is the term used to describe the use of camera tracking to composite green screen cinematography with computer-generated elements live. This approach gives filmmakers live references and a better spatial understanding of virtual elements such as computer-generated characters and set extensions, so that the visual effects added in post-production integrate better. Recent films and series have adopted advanced versions of this technique, in which the live composite is typically shown at a proxy resolution. Image 4 presents an example of hybrid virtual production.
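The need for camera tracking can be made concrete with the pinhole camera model, assumed here for illustration and ignoring lens distortion. A point at depth Z and lateral position X projects to the image coordinate u = fX/Z, where f is the focal length in pixels. If the camera translates sideways by t, the point’s image shifts by:

$$
u = \frac{f\,X}{Z}, \qquad u' = \frac{f\,(X - t)}{Z}, \qquad \Delta u = u - u' = \frac{f\,t}{Z}.
$$

Take illustrative values of f = 1000 px and t = 0.5 m. An element 2 m from the camera then shifts by 250 px, whereas one 50 m away shifts by only 10 px. A static backdrop cannot reproduce this depth-dependent shift. This is why the tracked camera pose is fed to the real-time engine, which re-renders the background from the matching viewpoint on every frame.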

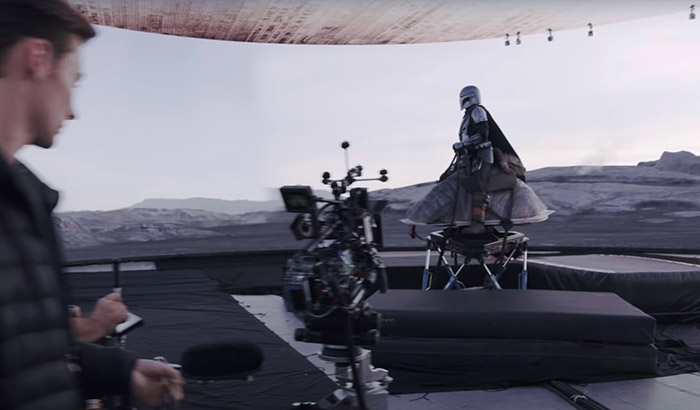

Such a hybrid approach can serve either as a live preview to be completed in post-production or as final pixel in camera. In recent years, some studios have replaced chroma key sets with large LED walls that display the background image composited live behind the actors. As in hybrid green screen virtual production, the background is an environment rendered live by the real-time engine. However, instead of a green wall being keyed out, the rendered background is sent to the LED wall, and compositing becomes an in-camera, single-exposure process. Image 5 shows another hybrid example, this time using LED walls. Citing Epic Games’ guide once again, “The use of image output from real-time engines to a live LED wall in combination with camera tracking to produce final-pixel imagery, completely in camera, represents the state of the art for virtual production.”

Image 4 – Hybrid virtual production for live green screen compositing (Mozar, 2020).

Virtual Productions

This chapter is dedicated to virtual production in practice, from the perspective of compositing as an individual branch of the industry. We address the types of production and weigh their advantages and disadvantages against traditional productions. We then study real cases where virtual approaches were adopted and provide additional considerations on the scientific research conducted on the topic.

Methodology

The virtual realm of film production encompasses a wide range of methodologies, and different solutions apply to different scenarios. Virtual production can therefore be categorized into types, all sharing the common denominator of a real-time engine:

- Visualization - in any film studio, virtual production almost certainly includes some form of prototype imagery created to convey the creative intent of a shot; this is called visualization. Among the many forms of visualization, such as pitchvis, previs, techvis and virtual scouting, we highlight postvis, which involves creating imagery that merges live-action elements with temporary visual effects.

- Hybrid Green Screen - in contrast with visualization, which helps plan shots, the hybrid method is used during recording and corresponds to the live compositing techniques discussed in the previous chapter. Here, camera trackers provide the data that shifts the background’s perspective, creating parallax perfectly synchronized with the camera. As with visualization, the output can be viewed either on a screen or through virtual reality hardware for an improved spatial understanding of the environment.

- Full Live - a further output of hybrid virtual production requires the composited imagery to be of final-pixel quality and sends it to an LED wall stage; this is called full live LED wall in-camera virtual production.

- Motion Capture - the process of recording the movements of objects or actors and using that data to animate digital models is called motion capture; although it was once a category of visual effects in its own right, technological progress now allows it to be used in real time, so it can be integrated into virtual production. Motion capture is usually divided into facial capture, performance capture and full-body capture.
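Using capture data to animate a digital model ultimately means applying measured joint rotations to a character’s skeleton. The sketch below is a heavily simplified, planar forward-kinematics example. The two-bone arm, its angles and the function name are illustrative assumptions, not the data format of any particular capture system.

```python
import math
from typing import List, Tuple

Point2 = Tuple[float, float]


def forward_kinematics(bone_lengths: List[float], joint_angles_deg: List[float],
                       root: Point2 = (0.0, 0.0)) -> List[Point2]:
    """Return the 2D joint positions of a planar chain of bones.

    Each angle is measured relative to the parent bone, as captured joint
    rotations often are. Reusing the same angles with different bone lengths
    is a very simplified form of retargeting motion onto another character.
    """
    if len(bone_lengths) != len(joint_angles_deg):
        raise ValueError("one joint angle per bone is required")
    x, y = root
    heading = 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles_deg):
        heading += math.radians(angle)  # accumulate rotation down the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


if __name__ == "__main__":
    captured = [90.0, -90.0]  # illustrative shoulder and elbow angles for one frame
    print(forward_kinematics([0.3, 0.25], captured))  # actor-sized arm
    print(forward_kinematics([0.6, 0.5], captured))   # larger digital character
```

The same captured angles drive both skeletons, but each wrist ends up at a position scaled to that character’s proportions.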

There is a natural overlap between the different types of virtual production, and one is rarely used without the others. In fact, these approaches are becoming so intrinsic to film production that modern scripts already assume some form of virtual integration. Even low-budget productions are starting to benefit, thanks to the growing availability of consumer hardware. Virtual production is therefore not limited to certain project types; one can argue that, if designed correctly, any film can take advantage of it. Nevertheless, there are two clearly distinct types of production that adopt virtual production differently:

- Fully Animated - while rotoscoping was once essential to produce animated films, today its virtual production equivalent is performance capture, in which the motion of an actor’s body and face is accurately sampled for transfer to digital characters and environments; this results in more realistic and lifelike animation and in faster, more cost-effective workflows.

- Live-Action - in productions that include camera footage, virtual production is used to plan or directly enhance imagery, portraying impossible situations or settings convincingly; major visual effects films increasingly rely on such technology, but the use of virtual production in live-action films has broadened to projects that are not effects-driven.

Virtual Production in Reality

It has been established that the live view of composited elements on set (whether in final-pixel quality or at a proxy resolution) is a major advantage of virtual production for filmmakers. Real-time render engines reduce the uncertainty linked to visual effects productions and remove many creative limitations that once constrained directors and film crews. Camera operators receive live feedback to frame all shot elements precisely. Production designers can instantly adapt computer-generated images to on-set props and actors, and stunts become safer and more controlled than if shot in real locations. This allows the film lifecycle to become a more interactive, non-linear and collaborative process. Visual effects artists who were once brought in only during post-production are now part of the filming crew. Directors and actors discuss virtual elements as if they were real instead of imagining them from green screen props, and interaction among production departments increases overall.

Displaying images on LED walls as backgrounds for actors, combined with camera tracking, brings valuable benefits. Actors feel more integrated with the virtual world, resulting in more immersive performances. The light and natural glare emitted by the screens directly shape the actors’ lighting, avoid the colour spill and glare of a traditional green screen and enhance the realism of the images. One use case that perfectly illustrates the usefulness of LED walls is the series ‘The Mandalorian’ (The Mandalorian 2019). Its main character wears reflective armour that would have posed tremendous difficulties for compositors on a green screen set. Image 5 shows how the images emitted by the walls are reflected on the armour, fully integrating the actor into the scene.

There are, however, some disadvantages to this technology. As with any innovative solution, its costs are high and its availability is scarce. LED walls might not be within the budget of many productions, although some experts suggest that adopting virtual production can pay off in the long run, depending on the context. Actors can be placed in a different environment within seconds, but the trade-off is that they are confined to the space the virtual production stage provides. In terms of expertise, the production crew must also include additional professionals, not previously required, to manage the infrastructure. This ranges from the real-time image processing units and LED hardware to the spatial tracking and live streaming systems.

Image 5 – Hybrid virtual production for live LED compositing (Seymour, 2020).

S. Lamouti, a technical manager at the visual effects company Neweb Labs, lists a few additional drawbacks of large screen sets (Neweb Labs 2021). Practical effects such as fire or explosions cannot be used near the walls. Actors must also be aware of what Lamouti calls the ‘road runner’ effect, in which, like the coyote, they may misjudge the distance to the wall because of the images’ photorealism. The ceiling and floor also limit the range of possible camera movements, as the LEDs do not usually cover them. Another relevant issue is the screens themselves, which can create moiré, an interference pattern in the final shot. It is usually advisable to keep a significant distance between the camera and the walls and to set up the camera so that the background is slightly out of focus. Other authors examine an additional challenge posed by LED walls: colour standardization (James et al. 2021, 1-2). They discuss how to ensure that the colours approved in pre-production match those emitted by the LEDs and captured by the camera.
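The advice to keep the wall slightly out of focus can be reasoned about with thin-lens optics. The sketch below estimates two sizes on the sensor: one LED pixel pitch, and the wall’s defocus blur. It assumes an ideal thin lens, ignores the sensor’s own photosite grid, and uses millimetres for all distances. The ratio between the two sizes is offered as a hypothetical rule of thumb, not an industry threshold. The more LED pitches the blur spans, the more the LED grid is smoothed out before it can interfere with the sensor.

```python
def projected_pitch_mm(pitch_mm: float, focal_mm: float, distance_mm: float) -> float:
    """Size on the sensor of one LED pixel pitch at distance_mm (thin-lens magnification)."""
    return pitch_mm * focal_mm / (distance_mm - focal_mm)


def blur_circle_mm(focal_mm: float, f_number: float, focus_mm: float, distance_mm: float) -> float:
    """Thin-lens blur-circle diameter on the sensor for an object at distance_mm
    while the lens is focused at focus_mm."""
    return (focal_mm ** 2 / (f_number * (focus_mm - focal_mm))) * abs(distance_mm - focus_mm) / distance_mm


def wall_blur_ratio(pitch_mm: float, focal_mm: float, f_number: float,
                    focus_mm: float, wall_mm: float) -> float:
    """Number of projected LED pitches covered by the wall's defocus blur.

    Hypothetical rule of thumb: values well above 1 suggest the LED grid is
    smoothed out before reaching the sensor, lowering the risk of moiré.
    The sensor's own photosite pitch is not modelled here.
    """
    blur = blur_circle_mm(focal_mm, f_number, focus_mm, wall_mm)
    return blur / projected_pitch_mm(pitch_mm, focal_mm, wall_mm)


if __name__ == "__main__":
    # Illustrative values: 35 mm lens at f/2.8, actor in focus at 3 m, wall at 8 m, 2.6 mm pitch.
    print(round(wall_blur_ratio(2.6, 35.0, 2.8, 3000.0, 8000.0), 1))  # 8.1
```

With these illustrative values, the blur spans about eight projected pitches. Focusing on the wall itself would reduce the ratio to zero, which is the situation the advice above tries to avoid.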

In recent decades, visual effects films have increasingly considered this new production approach worthwhile. Examples of projects that depended heavily on virtual production include ‘The Lord of the Rings’, ‘Harry Potter’, ‘Jurassic World’, ‘Star Wars’, ‘Pirates of the Caribbean’, ‘Transformers’, ‘Avatar’, ‘Life of Pi’ and ‘Bohemian Rhapsody’, among others. Even films without special effects are increasingly produced with virtual production. These projects are powered by companies specializing in virtual solutions and cutting-edge film technology, such as Industrial Light and Magic, The Virtual Company, Animatrik, Hyperbowl and PRG. Such virtual-production-based films reflect the cinema industry’s shift towards full dependency on technology. Large productions now have teams dedicated to camera tracking, as in Vicon’s collaboration with Industrial Light and Magic (Vicon 2021), and to stage setup, such as Vu Studio’s LED volume (Vu Studio 2021). New layers of on-set roles and responsibilities are being added, including on-set technical and creative support, virtual art departments, virtual backlots, and virtual asset development and management services.

A variety of workflows are emerging for virtual production. They are driven by the desire to get the most out of virtual technologies and to adapt to the constraints on participants’ geographic locations imposed by the global pandemic. Remote collaboration is becoming more common, and this is in fact one of the main reasons for the growth of virtual production itself. Other factors are also pushing the cinema industry to adopt virtual technologies, namely: the popularity of visual-effects-heavy genres; developments in game engines and consumer augmented and virtual reality hardware, driven mostly by the gaming industry; and the competition between streaming platforms and film studios. These are converging in terms of budget, talent and visual quality, and they compete not only for customers but also for production resources, including acting and technical talent and even production stages (Katz 2019).

Whether remote or in close collaboration, for film or for series, virtual production workflows rely on key infrastructure to produce in-camera visual effects and meet other goals, some of which have already been mentioned. The stage requires either green screens or LED display walls; if LED walls are chosen, the infrastructure includes LED modules, processors and rigging. High-end workstations, referred to as the ‘Brain Bar’, are also required for video assistance. Production and editorial use a networking infrastructure to move media/loads; data-intensive workflows such as motion capture need an isolated network with high-bandwidth switches. Synchronization and timecode hardware is also required to keep all acquired data in sync and lined up. Lens data (focus, iris and zoom) must be captured to determine lens distortion, field of view and the camera’s nodal point, and a real-time performance capture system must be chosen for tracking (Hibbitts 2020). Additional systems usually accompany the virtual art department, such as virtual camera and scouting systems.
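Two of the infrastructure items above involve simple but easily mishandled arithmetic. The first converts lens data into a field of view for the virtual camera. The second turns timecode into frame indices so that data streams can be lined up. The sketch makes these assumptions:

- the lens is an ideal rectilinear lens focused at infinity, with no distortion or focus breathing;
- timecode is non-drop-frame at an integer frame rate; drop-frame rates such as 29.97 fps would need extra handling.

The function names are illustrative.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_mm: float) -> float:
    """Horizontal field of view of an ideal rectilinear lens focused at infinity.

    Zoom data changes focal_mm; distortion and focus breathing are ignored.
    """
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def timecode_to_frame(tc: str, fps: int) -> int:
    """Convert non-drop-frame timecode 'HH:MM:SS:FF' to an absolute frame index."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError("frame field must be smaller than the frame rate")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


if __name__ == "__main__":
    print(horizontal_fov_deg(36.0, 18.0))        # approximately 90.0 degrees
    print(timecode_to_frame("01:00:00:12", 24))  # 86412
```

For example, a 36 mm wide sensor behind an 18 mm lens gives a 90° horizontal field of view, and 01:00:00:12 at 24 fps corresponds to frame 86412. Two devices that stamp the same instant with different timecodes can be aligned by subtracting their frame indices.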

At the heart of modern virtual production pipelines, a real-time engine acts as a powerful and flexible tool, from previs to final render. Adopted by most of the leading virtual production companies mentioned above, Unreal Engine is at the forefront of virtual technologies for filmmaking and continues to change nearly every aspect of the production process (Epic Games 2021). Over the past decade, Epic Games has expanded beyond visualization, where Unreal Engine was used for final asset creation and environment design. It now supports motion tracking systems for capture and animation, offers an in-camera visual effects toolset and the means to achieve live compositing, and even includes a video editing framework that lets an entire production reach the final edit without external software. Moreover, the company is bringing these capabilities to the general public in three ways. It releases the same tools used in large productions as open access plugins for the game engine. It provides the Virtual Production Primer, an online course. It also puts high-quality assets on the Unreal Marketplace and Quixel Megascans within reach of indie filmmakers (Kadner 2021, vol. 2). More broadly, efforts to reduce the costs of virtual production and extend it to lower-budget television productions and independent filmmakers have increased. Unity has a dedicated section covering the filmmaking aspects of its game engine (Unity Technologies 2021). The Animation Institute of Germany’s Filmakademie Baden-Württemberg has been working on commercial-level virtual production tools through the European Union funded research project Dreamspace (Animations Institute 2021).

The Scientific Novelty

Even though virtual production has become a hot topic in the film industry, this has not translated into equally large growth in the scientific literature. Throughout this study, few relevant scientific works were found. We therefore identified a need to address these new technologies, production workflows and compositing techniques formally and scientifically.

The work of O. Priadko and M. Sirenko provides a thorough analysis of recent research and publications on the topic but does not explore it in depth (Priadko and Sirenko 2021). The previously mentioned Dreamspace project also provides scientific contributions, presenting a platform for collaborative virtual production (Grau et al. 2017) and providing access to the open-source Virtual Production Editing Tools software (Spielmann et al. 2018). The same institute has released additional papers on consumer devices for virtual production (Helzle and Spielmann 2017), the use of augmented reality in virtual production (Spielmann et al. 2018) and set and lighting tools for virtual production (Trottnow et al. 2015). Other authors present XRStudio (Nebeling et al. 2021), a virtual production and live streaming system that is not aimed at filmmaking but is nonetheless applicable in that context as an instructional tool.

A number of additional sources were found in the scientific indexes, but access to them is restricted, so their content could not be analyzed for this study. Nevertheless, the most relevant ones are referenced here for future research. The authors of the recently released book ‘Pioneers in Machinima’ (Harwood 2021) use the term machinima, meaning machine cinema, to describe works in which technology and machines are applied to the cinema industry. In it, they use the most culturally significant machinima works as lenses to trace the history and impact of virtual production. The Moving Picture Company, one of today’s most successful visual effects companies, created a new virtual production platform called Genesis, powered by Unity, in collaboration with Technicolor (Moving Picture Company 2021). Much like Epic Games’ tools, Genesis provides the means to apply virtual production techniques to films and series; the latest versions of ‘The Jungle Book’ and ‘The Lion King’ were made with it. The company has published a few works describing its Genesis pipeline for virtual production (Tovell and Williams 2018) (Giordana et al. 2018), but regular Unity users are still left wanting open integration with the game engine. Teams from Industrial Light & Magic and Digital Domain offer insight into how virtual production was used to create the virtual world of Steven Spielberg’s film ‘Ready Player One’ (Cofer et al. 2018). Finally, K. Ilmaranta examines the ways virtual technologies have affected the arrangement of the cinema space (Ilmaranta 2020). She focuses on “how the concept of digital cinema has evolved” and how it “turned into manifesting an essential hookup between the filmmakers and digital content”. She also explains how virtual production blurs the borders between pre-production, production and post-production.

Although these works provide insights into virtual production from perspectives other than the business one, it would be valuable to conduct scientific studies on the real impact of these technological transformations on the film industry. Organizations would benefit from studies comparing traditional and virtual productions in terms of department interaction, production speed and costs. Multiple sources suggest that the visual effects department benefits greatly from virtual technologies, but, to the best of our knowledge, no scientific analysis of this has yet been conducted. User evaluations would also help clarify under what circumstances the advantages of live LED walls outweigh those of hybrid green screen real-time composites. They could also show whether virtual and augmented reality devices are indeed useful only in early stages such as location scouting and previs.

Conclusion

Cinematography has, ever since its birth, been linked to the creation of fictitious worlds and the representation of realities that are hard to replicate. Compositing techniques have allowed filmmakers to create visual illusions that convey the desired message and immerse the audience in each story. Compositing has evolved alongside the technological innovations of each generation of films, and many solutions emerged and faded over time. Some, however, such as the green screen and motion capture, became such reliable creative approaches that their value and precision only increased.

When visual effects achieved a level of photorealism indistinguishable from reality, the film industry seemed to have reached a limit in terms of visual quality. However, as the virtual production techniques used for visualization became established in the market and computer graphics became increasingly capable of rendering high-quality images in real time, a new opportunity came forth for filmmakers: live compositing started to become a reality, with the fully live LED wall representing the state of the art. Now, the same compositing techniques used for decades can be applied without creative limitations and to their full potential. Through increased interaction between departments, greater creative freedom and support for remote collaboration, virtual production has caused the deepest changes to production workflows that the cinema and television industries have ever witnessed.

Virtual technologies have expanded considerably in the past years, especially under the limitations imposed by the global pandemic, which may partially explain the gap between the business and scientific communities. This growth seems to be exponential, so predictions about the future should be treated as guesses. Nevertheless, educated guesses can be made based on the opinion of experts in the field and on the technological innovations expected to become available soon. Real-time ray tracing is expected to become a reality within this decade, with some demos already published before 2020 (Moran and Leo 2018), and these solutions will be supported by the next generation of graphics cards. The next version of Unreal Engine also promises to disrupt real-time photorealistic scene rendering with features such as Nanite and Lumen (Epic Games UE5 2021), bringing final-pixel in-camera quality beyond high-budget productions. Indeed, the adoption of virtual production techniques is expected to spread quickly to independent filmmakers (Allen 2019). Other technologies related to artificial intelligence and volumetric video have yet to reveal their full impact on the film industry, but we will most likely not have to wait long to find out.

Bibliography

Leeder, Murray. 2017. The Modern Supernatural and the Beginnings of Cinema. London: Palgrave Macmillan. DOI: 10.1057/978-1-137-58371-0_4.

Vlahos, Petro. 1964. Composite Colour Photography. United States Patent US3158477A. Motion Picture Res Council Inc. Available at: https://patents.google.com/patent/US3158477. Last access on 27/01/2022.

Modern Times. (1936). Directed by Charles Chaplin. United Artists production.

Fry, Ron and Fourzon, Pamela. 1977. The Saga of Special Effects: The Complete History of Cinematic Illusion. USA: Prentice Hall. ISBN: 0137859724.

The Matrix. (1999). Directed by Lana and Lilly Wachowski. Joel Silver Production.

Fleischer, Max. 1915. Rotoscope: Method of Producing Moving-Picture Cartoons. United States Patent US1242674A. Available at: https://worldwide.espacenet.com/patent/search/family/003310473/publication/US1242674A?q=pn%3DUS1242674. Last access on 27/01/2022.

Menache, Alberto. 2000. Understanding Motion Capture for Computer Animation and Video Games. USA: Morgan Kaufmann. ISBN: 9780124906303.

The Hobbit Trilogy. (2012). Directed by Peter Jackson. Warner Bros. Pictures.

Kadner, Noah. 2019. The Virtual Production Field Guide. USA: Epic Games. Available at: https://unrealengine.com/en-US/vpfieldguide. Last access on 27/01/2022.

Mozar, Amir. 2020. What is Virtual Production in Animation and VFX Filmmaking and its Benefits. The Virtual Assist. Available at: https://thevirtualassist.net/what-is-virtual-production-vp-filmmaking-benefits/. Last access on 06/02/2022.

Star Wars The Mandalorian. (2019). Directed by Jon Favreau. Lucasfilm, Fairview Entertainment and Golem Creations.

Seymour, Mike. 2020. Art of LED Wall Virtual Production. FX Guide. Available at: https://fxguide.com/fxfeatured/art-of-led-wall-virtual-production-part-one-lessons-from-the-mandalorian/. Last access on 06/02/2022.

Neweb Labs. 2021. The Pros and Cons of LED Screens. Canada. Available at: https://neweblabs.com/led-screen-pros-cons. Last access on 08/02/2022.

James, Oliver and Achard, Rémi and Bird, James and Cooper, Sean. 2021. Colour-Managed LED Walls for Virtual Production. USA: Association for Computing Machinery SIGGRAPH. Article 19, 1-2. DOI: 10.1145/3450623.3464682.

Vicon. 2021. ILM Case Study: Breaking new ground in a galaxy far, far away. United Kingdom: Vicon. Available at: https://vicon.com/cms/wpcontent/uploads/2021/04/ILM_Case_Study_Final-1.pdf. Last access on 08/02/2022.

Vu Studio. 2022. Virtual Production Campus. USA: Vu Studio. Available at: https://vustudio.com/. Last access on 08/02/2022.

Katz, Brandon. 2019. Hollywood Is Running Out of Room, and It Might Be Hurting Your Favorite Movies Most. USA: Observer. Available at: https://observer.com/2019/11/disney-netflix-universal-warner-bros-hollywood-soundstage-space/. Last access on 08/02/2022.

Hibbits, David. 2020. Choosing a real-time performance capture system. USA: Epic Games. Available at: https://cdn2.unrealengine.com/Unreal+Engine%2Fperformance-capture-whitepaper%2FLPC_Whitepaper_final-7f4163190d9926a15142eafcca15e8da5f4d0701.pdf. Last access on 08/02/2022.

Epic Games. 2021. Virtual Production Hub: the Future of Filmmaking. USA: Epic Games. Available at: https://unrealengine.com/virtual-production. Last access on 08/02/2022.

Kadner, Noah. 2021. The Virtual Production Field Guide Volume 2. USA: Epic Games. Available at: https://cdn2.unrealengine.com/Virtual+Production+Field+Guide+Volume+2+v1.0-5b06b62cbc5f.pdf. Last access on 08/02/2022.

Unity. 2022. Film, Animation and Cinematics. USA: Unity Technologies. Available at: https://unity.com/solutions/film-animation-cinematics. Last access on 08/02/2022.

Animationsinstitut. 2022. Dreamspace Project. Germany: Filmakademie Baden-Württemberg. Available at: https://animationsinstitut.de/de/forschung/projects/virtual-production/dreamspace. Last access on 09/02/2022.

Priadko, Oleksandr and Sirenko, Maksym. 2021. Virtual Production: a New Approach to Filmmaking. Ukraine: Bulletin of Kyiv National University of Culture and Arts. Series in Audiovisual Art and Production, pp.52-58. DOI: 10.31866/2617-2674.4.1.2021.235079.

Grau, O. and Helzle, V. and Joris, E. and Knop, T. and Michoud, B. and Slusallek, P. and Bekaert, P. and Starck, J. 2017. Dreamspace: a Platform and Tools for Collaborative Virtual Production. SMPTE Motion Imaging Journal, 126, pp.29-36. DOI: 10.5594/JMI.2017.2712358.

Spielmann, Simon and Schuster, Andreas and Götz, Kai and Helzle, Volker. 2018. VPET - Virtual Production Editing Tools. SIGGRAPH Emerging Technologies. DOI: 10.1145/3214907.3233760.

Helzle, Volker and Spielmann, Simon. 2017. Next Level Consumer Devices for Virtual Production. Available at: https://animationsinstitut.de/files/public/images/04-forschung/Publications/VPET_CVMP2017.pdf. Last access on 19/02/2022.

Trottnow, Jonas and Götz, Kai and Seibert, Stefan and Spielmann, Simon and Helzle, Volker. 2015. Intuitive virtual production tools for set and light editing. Proceedings of the 12th European Conference on Visual Media Production, 6, pp.1-8. DOI: 10.1145/2824840.2824851.

Nebeling, Michael and Rajaram, Shwetha and Wu, Liwei and Cheng, Yifei and Herskovitz, Jaylin. 2021. XRStudio: A Virtual Production and Live Streaming System for Immersive Instructional Experiences. In Proceedings of the Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, USA, 107, pp.1-12. DOI: 10.1145/3411764.3445323.

Harwood, Tracy and Grussi, Ben. 2021. Pioneers in Machinima: the Grassroots of Virtual Production. Vernon Press. ISBN: 978-1-62273-273-9.

Moving Picture Company. 2021. Genesis: Virtual Production Platform. MPC R&D and Technicolor. Available at: https://mpc-rnd.com/technology/genesis. Last access on 19/02/2022.

Tovell, Robert and Williams, Nina. 2018. Genesis: a pipeline for virtual production. Proceedings of the 8th Annual Digital Production Symposium. Association for Computing Machinery, New York USA, 7, pp.1-5. DOI: 10.1145/3233085.3233090.

Giordana, Francesco and Efremov, Veselin and Sourimant, Gaël and Rasheva, Silvia and Tatarchuk, Natasha and James, Callum. 2018. Virtual production in ‘Book of the Dead’: Technicolor’s Genesis platform, powered by Unity. SIGGRAPH Real-Time Live. DOI: 10.1145/3229227.3229235.

Cofer, Grady and Shirk, David and Dally, David and Meadows, Scott and Magid, Ryan. 2018. Three keys to creating the world of “Ready Player One”: visual effects & virtual production. SIGGRAPH Production Sessions. Association for Computing Machinery, New York, USA, 9, pp.1. DOI: 10.1145/3233159.3233168.

Ilmaranta, Katriina. 2020. Cinematic Space in Virtual Production. International Conference on Augmented / Virtual Reality and Computer Graphics, pp. 321-332. Available at: https://academia.edu/44002310/Cinematic_Space_in_Virtual_Production. Last access on 19/02/2022.

Moran, Gavin and Leo, Mohen. 2018. The ‘Reflections’ ray-tracing demo presented in real time and captured live using virtual production techniques. SIGGRAPH Real-Time Live. Association for Computing Machinery, New York, USA, 8, pp.1. DOI: 10.1145/3229227.3229230.

Epic Games UE5. 2021. Unreal Engine 5: Early Access. Available at: https://unrealengine.com/en-US/unreal-engine-5. Last access on 19/02/2022.

Allen, Damian. 2019. Virtual Production: It’s the future you need to know about. Pro Video Coalition. Available at: https://provideocoalition.com/virtual-production-its-the-future-you-need-to-know-about. Last access on 19/02/2022.